Why Business Data Science Irritates Me

Some thoughts following ryxcommar's post

Disclaimer: Compared to most other careers where you can earn a lot of money, data science is a pretty solid one. This post is about my gripes as an industry insider, and is not intended to discourage anyone from pursuing it as a career. I’m forever grateful I have a job where I can do the one thing I enjoy doing and earn a solid living.

ryx recently wrote a great post (Goodbye, Data Science) going through why he “left” data science, and became more of an engineer. His post doesn’t have a single point, but goes through all the aspects of industry data science that drove him crazy. You should check it out.

I resonated with his post so much that I felt compelled to write my reasons for being frustrated with industry data science, and what I think you can practically do about it if you feel the same way. Having worked as a data scientist for seven years, I’ve grown weary.

Data Science

The standard new data scientist is typically an academically minded person who gave up about $500k in opportunity cost (versus had they gone into industry as a data analyst or software engineer) to first do academic research that qualifies them for roughly the same salary.

Unlike the software industry, which does actually have a well-established way of scoping problems and developing solutions that is relatively consistent across the industry, data science is still a new enough profession that these patterns haven’t been solved and standardized.

This results in a particularly large gap between what we can do, and what we are asked to do. Unlike software products that manifest as a tangible user-facing application or tool, data science often ends as a mercurial insight or recommendation, and it is hard to tell whether it’s real or fake.

I’m not optimistic that these issues will be solved to my standards anytime soon, since I see it as inextricably linked with being able to scale reasoning and correct forms of scientific inference across an entire firm. This is a problem that hasn’t been solved even within academia.

My Start in the Field

Let me take you back seven years ago, when I was starting out as an industry data scientist at your (least) favorite big tech company. We were building demand forecasts, which were actively used to run the business and make multi-billion dollar investment decisions. The stakes were high, and it was worth investing tens of millions of dollars into a team that could optimize these decisions.

I had just come from an academic role in economic forecasting and was eager to apply my skills for profit. Forecasting always appealed to me as I have never been particularly interested in ‘telling stories with data.’ For every one good story that approximates reality, you get a hundred fake stories of people finding shapes in clouds.

I had started my path into understanding a deeper philosophy of science when I first read Hume, and from there it spiraled into recognizing the profound difficulty of scientific inference, statistical and causal inference, and understanding the world. With a forecast you make a claim about the future, and you can measure if you were right or wrong. I appreciated the straightforward nature of the exercise.

As I started I expected we would be building complex statistical models. The reality was different. Our forecasting models were remarkably simple. In fact, these simple models tend to be more accurate. The hard part for our team wasn’t the science, it was building a complete end-to-end data and software system that was fast, flexible, and could be easily modified. From the upstream data engineering, to the execution layer of the modeling, to our data model and front-end. There wasn’t a lot of room for mistakes.

The problem was well-defined, the science was straightforward, but the engineering was... well it wasn’t that hard, but it was the hardest part. So you would hope our team focused our efforts on building robust, correct, and scalable software systems — only that’s rarely how projects were created.

We would instead get insane requests from two groups: principal scientists who had come from academia, or senior business leadership, which would spin us off into huge failed projects, and prevent us from actually solving our core problem.

Insane Business Requests

The first large failed project was a request for us to attribute all forecasts to fundamental business drivers, and automatically explain the reason for all forecast misses. For some context, this would require a generic algorithm that could tell us both why shoe sales were too low in Italy, as well as why laptop sales were doing particularly well in the US.

We stopped our more important work on problems we could solve by properly engineering forecasting systems, and began to try and build models that would explain the forecasts in unrealistic detail for leadership. This project ended up as a disaster. The lessons were painful to learn. I hadn’t joined academia for a lot of reasons, but a big one was that I’m constitutionally incapable of misrepresenting what I believe is the scientific truth, even if it is in my own best interests.

Other data scientists might have just done something like fit a random forest on top of the forecasts with some noisy and incomplete business drivers as features, ignoring issues with statistical identifiability, stationarity, or anything else, and interpreted those features as causal drivers. They wouldn’t have even done it because they are liars, but because most data scientists never learned enough statistics to know you should not do this — and by should not, I mean that the answers won’t correspond to reality.

(As a defensive aside, I realize that sometimes quick and dirty analysis can be really useful for business partners by automating simple things, even if it’s not formally correct. This wasn’t one of those situations.)

But dammit, I wasn’t like that. I was going to set everything up statistically correctly, let the model learn the relationship, and report the parameter estimates to senior leadership, even if it meant I was going to report all null results, and my entire project failed.

If it sounds like I’m portraying myself as some upholder of scientific truth, no matter the consequences, I promise you that’s not what I was thinking at the time. I was just naive, stressed out, and never even realized I could have done something different like make a fake model that made everyone happy.

However, if this actually happened today, I’d politely tell them their ideas are bad in a well written document. If there was no getting out of it, I would just define some accounting identities that decompose errors into a few buckets by definition, and avoid doing actual statistical learning. If you know a question is ill-posed, it’s a waste of everyone’s time to actually build and estimate a model.
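To make the accounting-identity idea concrete, here is a minimal sketch. It assumes revenue is simply units times price, which is an illustrative choice and not the decomposition we actually used, and every name in it is hypothetical. The three buckets sum to the total miss by construction, so nothing is estimated and there is no model to be wrong.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MissDecomposition:
    volume_effect: float       # units differed from forecast, valued at the forecast price
    price_effect: float        # price differed from forecast, applied to forecast units
    interaction_effect: float  # units and price both differed at once

    @property
    def total(self) -> float:
        return self.volume_effect + self.price_effect + self.interaction_effect


def decompose_revenue_miss(forecast_units, forecast_price, actual_units, actual_price):
    """Split (actual revenue - forecast revenue) into three buckets.

    Assumption for illustration: revenue = units * price. The split is an
    algebraic identity, not a statistical estimate, and it says nothing
    about why units or price moved.
    """
    d_units = actual_units - forecast_units
    d_price = actual_price - forecast_price
    return MissDecomposition(
        volume_effect=d_units * forecast_price,
        price_effect=forecast_units * d_price,
        interaction_effect=d_units * d_price,
    )


# Made-up numbers: forecast 1000 units at 50.0, actual 900 units at 52.0.
miss = decompose_revenue_miss(1000, 50.0, 900, 52.0)
print(miss, miss.total)
```

The buckets only describe where the miss shows up in the arithmetic. That is the whole point: leadership gets a breakdown, and nobody pretends a causal driver was learned.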

Eventually the project failed, a manager was fired, some senior leader who asked for it decided to pivot to another org, and I went back to what I was doing before, which was building good forecasting software, and adding marginal improvements to our existing models.

Insane Science Requests

The company I worked for did hire a series of accomplished academics, some of whom were even relatively famous. In a lot of cases, it was intellectually rewarding to talk to them, but their actual contributions to business data science were often misguided.

The charitable reason for this is that a lot of academic development is focused on trying to push the needle on cutting edge methods, which results in a lot of complex statistics and math, which wasn’t where we needed help.

The less charitable reason is that academics add a lot of needless complexity to their models and papers to show how clever they are. I think the true answer is somewhere in between, but what is definitely true is that when you’re getting paid a lot of money to solve a business problem fast, that’s not the right time to try and do unnecessarily clever modeling.

There are a few reasons why it’s usually not necessary. The first is the obvious one, which is that there are diminishing, and often negative, returns to model complexity. The OLS regression, or the XGBoost model, in your first chapter of an econometrics or ML textbook is usually going to work better and be more robust than the fancy stuff in the later chapters.

The second is more subtle, which is that the marginal value of your time is usually better spent on improving your upstream data quality and data pipelines, as opposed to trying to squeeze out a few more bps of accuracy from your model. Data is terrible, always changing, and always wrong. Improving your signal-to-noise ratio at that point in the pipeline usually pays more dividends than trying to more cleanly extract signal in your model.

The third is when you eventually leave your team, the fancy Bayesian structural time-series that you wrote from scratch (but didn’t properly version control) in order to have a more academically rigorous jointly estimated model and impress everyone, is inevitably going to break. When it does break, the data scientists still on the team probably won’t be experts on the mechanics. If it was a linear regression, or really any workhorse stats or ML model, they could jump in and fix it.

Most academics have never thought of these constraints and trade-offs before. I’ve worked with some who picked up on them very quickly and were a pleasure to work with, but I’ve also worked with some who drove everyone crazy with their academic performance of showing their intelligence.

Junior Scientists and Unnecessary Complexity

A lot of the criticisms of academics apply to junior scientists (myself included at one point). They have come from the same place. The good ones, which in my experience are about half of them, leave their ego at the door and are eager to learn how to be a good full stack data scientist. There are others, though, who are too precious to have to learn how to collaborate and build proper software.

Whether they have the right attitude or not, they almost always overcomplicate their projects. There is a similarity here to software engineers who spend all this time learning CS in their undergrad, and then learning algorithms, to eventually end up spending most of their time on CRUD apps, or dealing with cloud permissions and data migrations.

Scientists come in wanting to build cutting edge models, and most of the time we have to crush their dreams, and tell them to start by solving the problem with SQL, and if absolutely necessary, a linear regression. If we do end up using more complex ML, the hard part is going to be constructing the surrounding software system, and the actual model implementation will be a by-the-book approach that makes up 2-5% of our codebase by lines.

I remember a formative moment for me was when we had this edge-case that was occurring in a small number of our time-series (out of millions) where the forecasted growth rate was unreasonably high. My initial thought was we were going to have to think carefully about the statistics of the model and how to constrain the parameter space. My mentor at the time just added some new post-model validation module that would check for unreasonable cases, then constrain the forecast down, in a few hours.

Not only did he do it ten times faster than I would have, but his solution would now apply more generally to any forecast we produced, and wasn’t going to be specific to a certain statistical choice, which could easily change in the future. It wasn’t academically correct or impressive — but we didn’t need it to be.
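A guardrail of that kind might look roughly like the sketch below. This is not my mentor's code: the function name, the 50% per-step cap, and the choice to clamp rather than reject are all assumptions for illustration. What matters is that it runs after any model and knows nothing about how the forecast was produced.

```python
def cap_forecast_growth(last_actual, forecast, max_growth_per_step=0.5):
    """Clamp each forecast step so it never grows faster than the cap.

    Hypothetical post-model check: works on the output of any model.
    Assumes a strictly positive series; growth is measured against the
    previous (already adjusted) value.
    Returns the adjusted forecast and the indices of the capped steps.
    """
    if last_actual <= 0:
        raise ValueError("this sketch assumes a strictly positive series")
    if max_growth_per_step < 0:
        raise ValueError("max_growth_per_step must be non-negative")

    adjusted, capped = [], []
    previous = last_actual
    for i, value in enumerate(forecast):
        ceiling = previous * (1.0 + max_growth_per_step)
        if value > ceiling:
            value = ceiling
            capped.append(i)  # record it so someone can review the series later
        adjusted.append(value)
        previous = value
    return adjusted, capped


# Made-up series: the second and third steps grow unreasonably fast.
adjusted, capped = cap_forecast_growth(100.0, [120.0, 400.0, 450.0])
print(adjusted, capped)
```

Returning the capped indices alongside the adjusted values keeps the edge cases visible for later review instead of silently hiding them.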

In practice this means you need to keep an eagle eye on every junior scientist, and crush their dreams in science and code reviews. The really excellent ones will realize this is a new game that’s different from academia, and learn to have fun with the challenge of building complete systems, but some are grumpy about not always being able to work on cutting edge statistics or ML. They want both the high pay of the private sector, and the pure intellectual satisfaction of academia. If you’re particularly talented you maybe can get both in certain research teams or OpenAI or something, but that’s not the standard.

Given the rapid rise of this field, the demand for mentors with practitioners' intuition is far greater than the supply. As a result, mentors become overloaded, and new entrants to the field are unlikely to find a great mentor.

Insights are Fake

The business often wants ‘insights’ into their data. They often don’t know what they’re asking for, but they do know that data scientists can make plots and write queries.

The issue is that providing insights suffers from a principal-agent problem, because giving someone true insights into the data will not be rewarded as well as giving someone nice intuitive insights. While you might believe some insights are truer than others, you often can’t check afterwards whether you were right, since you don’t see a counterfactual. So answering the business with “We can’t really use statistical learning here to help, here are the plots you should be looking at, I suggest you use your best business judgment” may look like a failure, even if it’s the correct answer.

Whereas the team that agrees to some insane request, fits some poorly identified gradient boosting model, interprets the feature importance causally, and then puts it in a slick dashboard will look better than a team that takes the correct approach. I have worked on teams that do take the correct approach, and certain companies sincerely work to have a culture that encourages this, but it is a constant struggle and not the norm.

Science is Hard

The issue of insights, like most of what I have written about so far, is downstream of the fact that science is hard. The vast majority of people, even data scientists, don’t understand how to reason about the world scientifically. This is combined with the fact that the incentives to be right aren’t the same as the ones that help you progress in your career. The only people who get it right are the ones who are too disagreeable to handle things being incorrect, even when it works against their self-interest.

Science is hard in a different way than engineering is hard. Engineering, whether it’s building an airplane or maintaining cloud infrastructure, is very complicated. As an engineering system is incrementally built and scoped out, you nail down all the complicated variables. Engineering can still be brutally difficult, but the path forwards comes from resolving these complicated components. The difficulty of science arises from trying to pin down complex systems. This is why we use statistics to quantify our uncertainty, and make inferences under incomplete information.

Is it harder to measure the effect the minimum wage has, or build an airplane? Building an airplane is probably harder, but at least when you succeed you get to know you succeeded by flying in a plane. We have dedicated huge amounts of economics research towards answering how the minimum wage impacts an economy, and depending on who you ask, you’ll get a different answer.

This difficulty in science more generally extends itself into data science. Knowing if we have an edge in solving a data science problem requires a sharply calibrated intuition regarding how statistical learning works, and what we can expect to extract from the information we have available.

Was your team asked if you can explain how a future unemployment shock will affect a forecast, when your company has only existed during a period of economic stability? You could explain that you can’t do better than some best guesses and a spreadsheet in two weeks, or you could write a bunch of over-engineered code over six months to pretend to simulate the future. I’ve seen this happen multiple times, in multiple companies. Not only will senior management not know the difference, but the truth is most data science management won’t either.

Consider another case: early covid inference. It was a global data science problem we were all paying attention to at the same time, trying to get insights from data.

There were scientific research teams who were using clearly incorrect models to predict the pandemic. The Imperial Covid simulation was a behemoth of poorly managed C++ code with 940 parameters, with no claim to predictive validity, which was trying to pin down an extremely complex new outbreak without historical data. Philippe Lemoine articulated this in exhausting detail. Despite this, the model had a scientific veneer, was made by smart people, and gave policy makers a false sense of confidence.

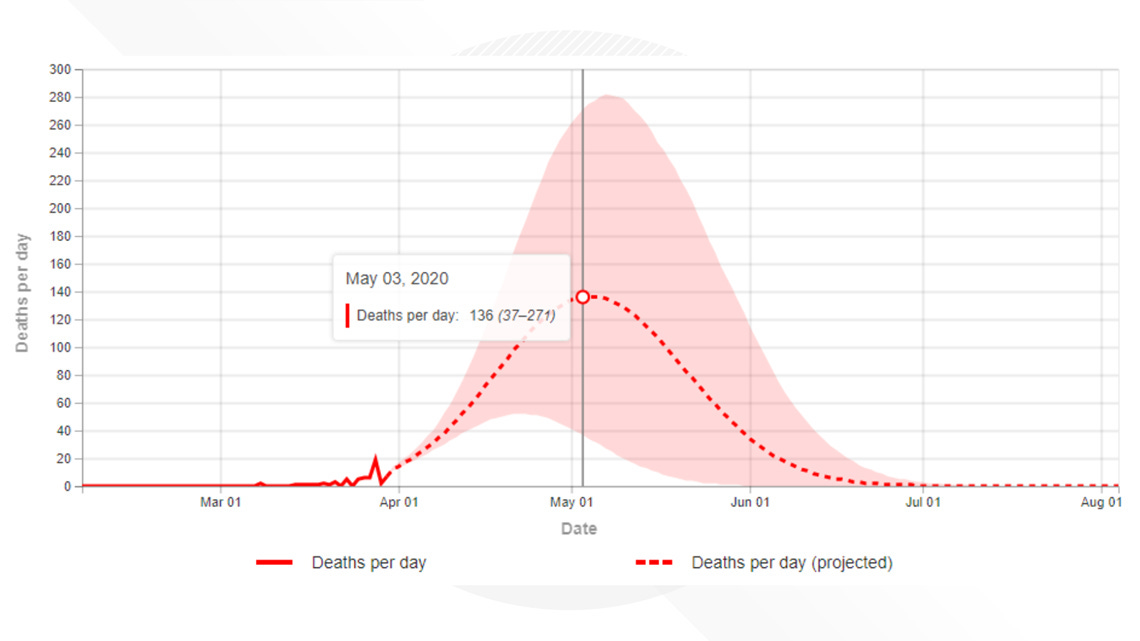

Don’t forget the original IHME model either, which was used for ‘crushing the curve.’ In what world did it ever make sense that deaths and cases would hit zero? We know from all our existing circulating coronaviruses that this isn’t how it works. We know from past pandemics they tend to take 2-7 years to become endemic. We had a completely immune naive population, yet somehow cases would drop and stay at zero? Yet they had one of the first, slick, visualization dashboards.

My perspective at the time was that we were so information constrained, there was no point in using anything other than the sample mean and some summary statistics. Stop trying to squeeze blood from a rock, and instead try to articulate your uncertainty.
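As a rough illustration of what "the sample mean and some summary statistics" can look like in code, here is a small sketch using only the Python standard library. The function name and the numbers are hypothetical. The interval uses a normal approximation, which is a crude assumption that will understate uncertainty for small or autocorrelated samples, so treat it as a floor on how unsure you should be.

```python
import math
from statistics import NormalDist, mean, stdev


def summarize(sample, confidence=0.95):
    """Return basic summary statistics plus a rough interval for the mean."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations to estimate spread")
    center = mean(sample)
    spread = stdev(sample)              # sample standard deviation (n - 1 denominator)
    std_error = spread / math.sqrt(n)   # assumes independent observations
    # Assumption: normal approximation for the interval; too narrow for tiny samples.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "n": n,
        "mean": center,
        "stdev": spread,
        "min": min(sample),
        "max": max(sample),
        "std_error": std_error,
        "interval": (center - z * std_error, center + z * std_error),
    }


# Made-up observations, purely for illustration.
summary = summarize([10, 12, 14, 16, 18])
print(summary)
```

Reporting the interval and the raw range next to the mean is the cheap way to say "this is how little we know" without building a simulation.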

My point here is that these were the models built by teams of researchers who should know better, and maybe more important than the supply of this research, this was the type of output demanded by consumers of models.

The business world is the same. The leaders of companies demand nice data science artifacts that provide intuitive projections of the future, and this is a field full of very academically intelligent managers in their late 20s who often pivoted from quantitative disciplines, where they did some applied statistics for a few years, who are happy to give it to them.

Robust, Systems Oriented, Data Science

With insane requests frequently coming from up top, and unnecessary complexity coming from below, what do you do? The smartest staff scientist I ever worked with once told me “My job is to tell junior scientists their ideas are bad, but they should feel good about them. And to tell senior leadership their ideas are bad, and they should feel bad about them.” This is career progression in industry data science.

The science doesn’t progressively get harder, unless you have a specific expertise and have found yourself in a research team. For most business data science, the return on improving the data, or reducing system complexity, almost always has a higher ROI than iterating on the specific statistical or ML model. This isn’t a rule, it’s just usually the case.

You instead become responsible for shielding junior scientists from terrible requests, and for preventing them from pushing terrible code to production. You’re rarely going to be implementing complex new models with your increased seniority. Instead your job is to help define KPIs and business metrics, and then align junior scientists to be in a position to execute on them, and make sure the technical solutions are correct.

What’s Next?

There are two ways out of this world. One is for our industry to mature out of it, and the other is a personal shift you can make with your own career.

In terms of our industry, we need to focus much more on communicating the benefits of sticking to problems with actual solutions, and then making marginal investments into robust, data-oriented software systems. As data scientists, this means we have to take systems engineering seriously.

Most scientific problems I’ve worked on could be solved by a correct representation of an empirical distribution in a histogram. The next largest group needed a linear regression. The final group needed a statistical model from the first chapter of a PhD textbook. When the scale of the project grew, it was never because the statistics got too difficult. It was because the scale of the software, the data intensiveness, the edge-case handling, ramped up.

Despite the models being straightforward, the engineering and systems surrounding it never are, and need a lot of careful craft. Or need to be built in the first place. This can involve specific types of scientific engineering as well — what are elegant ways to check for model failures in production? These are problems that you need scientific intuition to solve, but the solution looks like software engineering.
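One simple version of such a check, sketched under assumptions: compare the production model against a naive "repeat the last observed value" forecast on recent actuals, and flag the series when the model stops beating it. The function names and the tolerance parameter are hypothetical, and mean absolute error is just one reasonable choice of metric.

```python
from statistics import fmean


def mean_absolute_error(actuals, predictions):
    """Average absolute gap between actuals and predictions."""
    if not actuals or len(actuals) != len(predictions):
        raise ValueError("inputs must be non-empty and the same length")
    return fmean([abs(a - p) for a, p in zip(actuals, predictions)])


def model_beats_naive(last_observed, actuals, model_forecast, tolerance=1.0):
    """Return True if the model's error is within tolerance of the naive error.

    Hypothetical production check: the naive forecast repeats the last
    value observed before the forecast window. tolerance=1.0 means the
    model must be at least as accurate as the naive forecast.
    """
    naive_forecast = [last_observed] * len(actuals)
    model_mae = mean_absolute_error(actuals, model_forecast)
    naive_mae = mean_absolute_error(actuals, naive_forecast)
    return model_mae <= tolerance * naive_mae


# Made-up numbers: one healthy forecast and one that has clearly gone wrong.
healthy = model_beats_naive(100.0, [102.0, 105.0, 107.0], [101.0, 104.0, 108.0])
broken = model_beats_naive(100.0, [102.0, 105.0, 107.0], [150.0, 160.0, 170.0])
print(healthy, broken)
```

Choosing the baseline and the tolerance takes scientific judgment, but the artifact that ships is a few lines of tested software that runs on every forecast.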

Unfortunately, many data scientists don’t recognize this or aren’t interested in improving their engineering skills. They instead continue to try and bring in overly complex and interesting models, and encapsulate them in poorly written code. Or depend on existing upstream data, rather than asking if the input data pipelines are even the correct ones. This often isn’t their fault, since career progression is built on top of launched models, not excruciatingly difficult but unimpressive sounding sentences like “Refactored upstream Spark pipeline to expose two new customer metrics to downstream users.” Some particularly egregious scientists will even fight against using version-controlled Python, and instead work in a notebook they don’t share with anyone else.

The optimistic take is that at the right companies you probably will be rewarded for moving the industry in the right direction. If you can kill projects immediately that you know will fail based on sharp intuition, simplify important projects, and communicate why to senior leadership, that’s going to be great for your career. This will require you moving out of an IC position though, and instead of being an applied scientist and engineer, becoming a manager and advocate.

Maybe you don’t want to do that. John Carmack recently resigned from Meta because he didn’t have the desire to stop coding and wasn’t interested in being a manager or senior leader.

The other approach is a career shift. ryx talks about shifting to a Data Engineer role. My perspective here is conceptually the same, but slightly different, which is that I think remaining a scientist is fine, but switching to a more engineering focused team is the correct move. The job title here isn’t too important, whether it’s Data Engineer, Software Engineer, Machine Learning Engineer, or Data Scientist (with a focus on engineering).

While about half my job remains business data science, the other half is on building software to support not only the work I do, but the work of other internal data scientists. I plan on continuing this shift, and focusing more on building internal tools or software, and spending less time directly interfacing with the business. In this world I can continue to sharpen my technical skills, rather than focusing on building a career in people, communication and persuasion.

If you want to remain an IC, with your head in the code, incrementally shifting towards a more engineering focused type of science can be a good move, while taking advantage of your scientist’s intuition on how to not overcomplicate or over-engineer a solution.

From one “recovering data scientist” to another, thanks for writing this.

Thanks for writing this post - I can relate to it so much. I've been in the industry for almost four years with a master's degree in computer science. I spent my first 3 years working as the type of scientist who focused on fitting models and building dashboards for the business, and I thought that was the norm. However, I was tired of being the middleman (having a hard dependency on data engineers to collect the data and software engineers to deploy the solution), plus my value was mostly measured by how many models I shipped (and it took forever to ship due to limited capacity of SDEs), so I was burnt out and left. Somehow I ended up on a team where I became a full stack DS, but the team has no ML at all. It's engineering heavy and sometimes I even forget that I'm a scientist (though I do take care of the analytical improvement part). I had doubts about the path forward but after reading your post, it looks like I was somewhat on the right track (per my definition). At the end of the day, applied data science is not about using the SOTA models; instead, it's about providing value through data in a consistent manner, hence engineering skills are a must. Time to tidy up my code again :)